Project 2: Cardiac Arrhythmia Prediction (Binary Classification)

In this project, I built a model to classify whether patients have cardiac arrhythmia. The data was collected during research by H. Altay Guvenir at Bilkent University, and the dataset can be downloaded here from the UCI platform.

To prepare the data before model fitting, I used the custom framework I developed (published in this portfolio as Project 1). I followed these steps:

- Data Prep: definition of feature types and target, and replacement of the null character (?).

- Data Partition: splitting into a train set (66%) and a test set (33%).

-

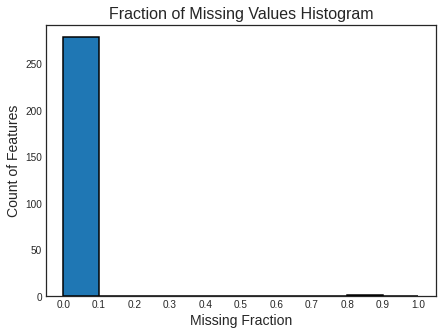

Imput Missing Values: filling of null values using the mean, for numerical features, and mode (most frequent category), for categorical features.

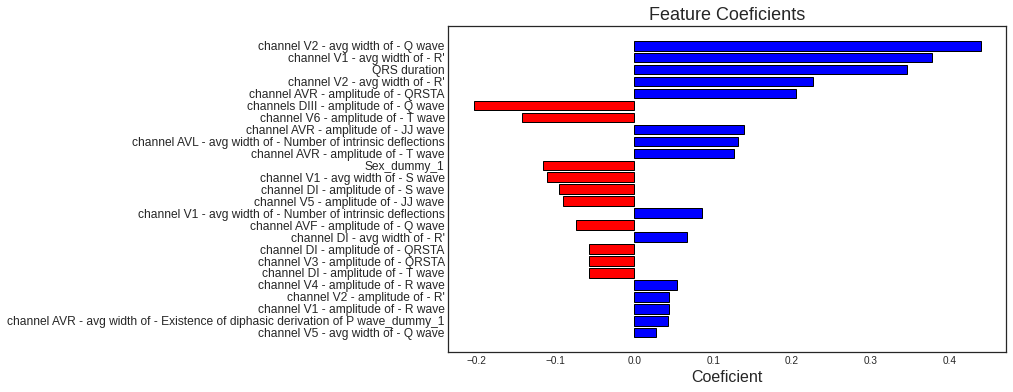

- Feature Selection (Lasso Regression): feature selection method using Regularized Logistic Regression (Lasso).

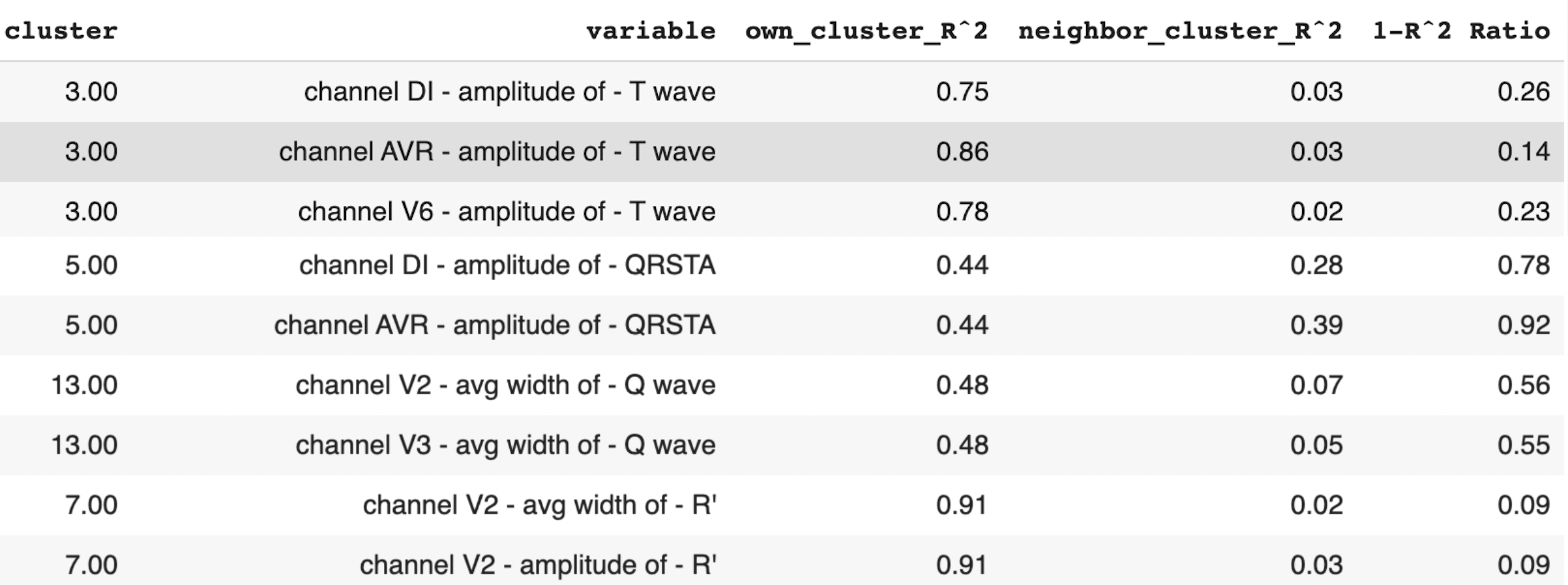

- Variable Clustering (Correlation Analysis): feature selection method based on variable clustering, with correlations as the distance measure.

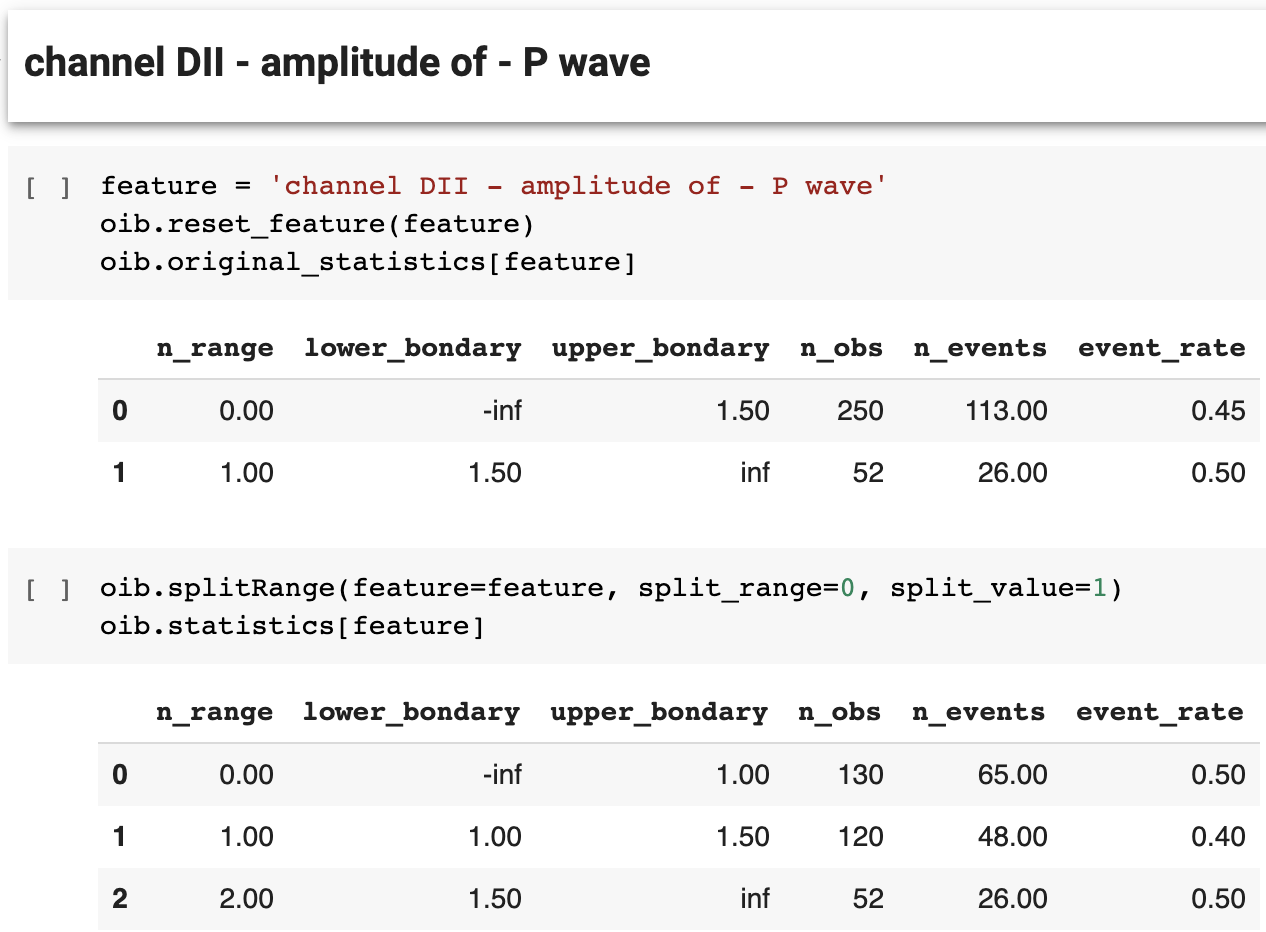

- Optimal Interval Binning: discretization of numerical features. When necessary, I also adjust the range configuration interactively to correct non-parsimonious patterns across the ranges.

- Dummy Transformer: creation of dummy indicators for categorical features (both original and newly created ones, such as the ranges defined in the previous step).

- Model Fitting: model fitting using Lasso Regression.
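The core of these steps (null character replacement, partition, imputation, dummy creation, and the Lasso fit) can be sketched with scikit-learn. This is only an illustration under stated assumptions: the custom framework from Project 1 is not shown here, so scikit-learn stands in for it, the data is synthetic rather than the UCI dataset, the column names are hypothetical, and the scaling step is an addition that the steps above do not mention.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Synthetic stand-in data (NOT the UCI arrhythmia dataset); column names are hypothetical.
rng = np.random.default_rng(0)
n = 300
age = rng.normal(50, 12, n).round(1)
heart_rate = rng.normal(75, 10, n).round(1)
raw = pd.DataFrame({
    "age": age.astype(str),
    "heart_rate": heart_rate.astype(str),
    "sex": rng.choice(["F", "M"], n),
    "target": (heart_rate - 75 + rng.normal(0, 8, n) > 0).astype(int),
})
# Inject the "?" null character into one numerical and one categorical column.
raw.loc[rng.choice(n, 20, replace=False), "age"] = "?"
raw.loc[rng.choice(n, 20, replace=False), "sex"] = "?"

# Data Prep: replace the null character and define feature types.
num_cols, cat_cols = ["age", "heart_rate"], ["sex"]
df = raw.replace("?", np.nan)
for col in num_cols:
    df[col] = pd.to_numeric(df[col])
df[cat_cols] = df[cat_cols].astype(object)

# Data Partition: hold out 33% of the rows as the test set.
X_train, X_test, y_train, y_test = train_test_split(
    df[num_cols + cat_cols], df["target"], test_size=0.33, random_state=0
)

# Impute Missing Values + Dummy Transformer.
preprocess = ColumnTransformer([
    ("num", Pipeline([
        ("impute", SimpleImputer(strategy="mean")),
        ("scale", StandardScaler()),  # added assumption: L1 penalties are scale-sensitive
    ]), num_cols),
    ("cat", Pipeline([
        ("impute", SimpleImputer(strategy="most_frequent")),
        ("dummy", OneHotEncoder(handle_unknown="ignore")),
    ]), cat_cols),
])

# Model Fitting: L1-regularized (Lasso) logistic regression.
model = Pipeline([
    ("prep", preprocess),
    ("lasso", LogisticRegression(penalty="l1", solver="liblinear", C=1.0)),
])
model.fit(X_train, y_train)

auc_train = roc_auc_score(y_train, model.predict_proba(X_train)[:, 1])
auc_test = roc_auc_score(y_test, model.predict_proba(X_test)[:, 1])
print(f"AUC train = {auc_train:.4f}, AUC test = {auc_test:.4f}")
```

Wrapping the imputers in a pipeline means the means and modes are learned from the train set only and then reused on the test set, which avoids leaking test information into the fit.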

As described below, I tested three distinct step combinations to select the best among them:

- Baseline Model: no feature selection and no feature discretization.

- Model 1: both feature selection methods (Lasso Regression and Variable Clustering) plus feature discretization (Optimal Interval Binning), with no changes to the computed ranges.

- Model 2: both feature selection methods (Lasso Regression and Variable Clustering) plus feature discretization (Optimal Interval Binning), with the computed ranges adjusted, or discretization skipped for a feature, when necessary (e.g. when the numerical feature has a linear relationship with the target).
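The two feature selection steps shared by Model 1 and Model 2 can be sketched as follows. This is a minimal illustration, not the framework's actual implementation: the helper names `lasso_select` and `cluster_representatives`, the `C` and `max_distance` values, the choice of `1 - |correlation|` as the distance, and the rule of keeping the first feature of each cluster are all assumptions, and the data is synthetic.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage
from scipy.spatial.distance import squareform
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler


def lasso_select(X, y, C=0.1):
    """Return indices of features with a non-zero coefficient under an L1 penalty."""
    Xs = StandardScaler().fit_transform(X)
    clf = LogisticRegression(penalty="l1", solver="liblinear", C=C).fit(Xs, y)
    return np.flatnonzero(np.abs(clf.coef_[0]) > 1e-8)


def cluster_representatives(X, max_distance=0.3):
    """Cluster features by 1 - |correlation| and keep the first feature of each cluster."""
    if X.shape[1] < 2:
        return np.arange(X.shape[1])
    dist = np.clip(1.0 - np.abs(np.corrcoef(X, rowvar=False)), 0.0, None)
    np.fill_diagonal(dist, 0.0)
    tree = linkage(squareform(dist, checks=False), method="average")
    labels = fcluster(tree, t=max_distance, criterion="distance")
    reps = [np.flatnonzero(labels == k)[0] for k in np.unique(labels)]
    return np.sort(np.array(reps))


# Synthetic data: two pairs of near-duplicate features plus two unrelated ones.
rng = np.random.default_rng(42)
n = 400
a, b, c = rng.normal(size=(3, n))
X = np.column_stack([
    a, a + 0.05 * rng.normal(size=n),  # columns 0-1: near-duplicates
    b, b + 0.05 * rng.normal(size=n),  # columns 2-3: near-duplicates
    c, rng.normal(size=n),             # columns 4-5: unrelated to the target
])
y = (a - b + 0.5 * rng.normal(size=n) > 0).astype(int)

kept = lasso_select(X, y)                          # step 1: Lasso-based selection
final = kept[cluster_representatives(X[:, kept])]  # step 2: one feature per cluster
print("kept by Lasso:", kept, "| after clustering:", final)
```

Running the clustering after the Lasso step removes redundancy that the penalty may leave behind: if both members of a highly correlated pair survive the Lasso, only one of them is kept.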

Results:

| Model | AUC Train | AUC Test |
|---|---|---|
| Baseline | 0.8991 | 0.8099 |
| Model 1 | 0.8757 | 0.8140 |
| Model 2 | 0.8957 | 0.8391 |
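One way to read these results is through the gap between train and test AUC, a rough indicator of overfitting. The short sketch below only does arithmetic on the reported values. The gap itself is a diagnostic added here, not a metric from the original study.

```python
# AUC values copied from the results table: (train, test).
results = {
    "Baseline": (0.8991, 0.8099),
    "Model 1": (0.8757, 0.8140),
    "Model 2": (0.8957, 0.8391),
}

# Train-test gap per model, rounded to the table's precision.
gaps = {name: round(train - test, 4) for name, (train, test) in results.items()}
best = max(results, key=lambda name: results[name][1])

for name, gap in gaps.items():
    print(f"{name}: train-test AUC gap = {gap:.4f}")
print("Highest test AUC:", best)
```

Under this reading, Model 2 has both the highest test AUC and the smallest train-test gap of the three combinations.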

The Colab Notebook of this study is available here.

- Logistic Regression

- Lasso Regression

- Impute Missing Values

- Feature Selection

- Variable Clustering

- Feature Discretization